Journal of Intelligent Agricultural Mechanization, 2024, Vol. 5, Issue (4): 51-65. DOI: 10.12398/j.issn.2096-7217.2024.04.004


MA Zenghong1,2,3, YUE Jiawen1,2,3, YIN Cheng1,2,3, ZHAO Runmao1,2,3, CHANDA Mulongoti1,2,3, DU Xiaoqiang1,2,3

Received: 2023-10-25; Revised: 2023-12-29; Online: 2024-11-15; Published: 2024-11-15

Corresponding author: DU Xiaoqiang

About the author: MA Zenghong, PhD, Associate Professor; research interests: agricultural machinery navigation and unmanned driving. E-mail: mzh2018@zstu.edu.cn


MA Zenghong, YUE Jiawen, YIN Cheng, ZHAO Runmao, CHANDA Mulongoti, DU Xiaoqiang. Visual navigation in orchard based on multiple images at different shooting angles[J]. Journal of Intelligent Agricultural Mechanization, 2024, 5(4): 51-65.


URL: http://znhnyzbxb.niam.com.cn/EN/10.12398/j.issn.2096-7217.2024.04.004

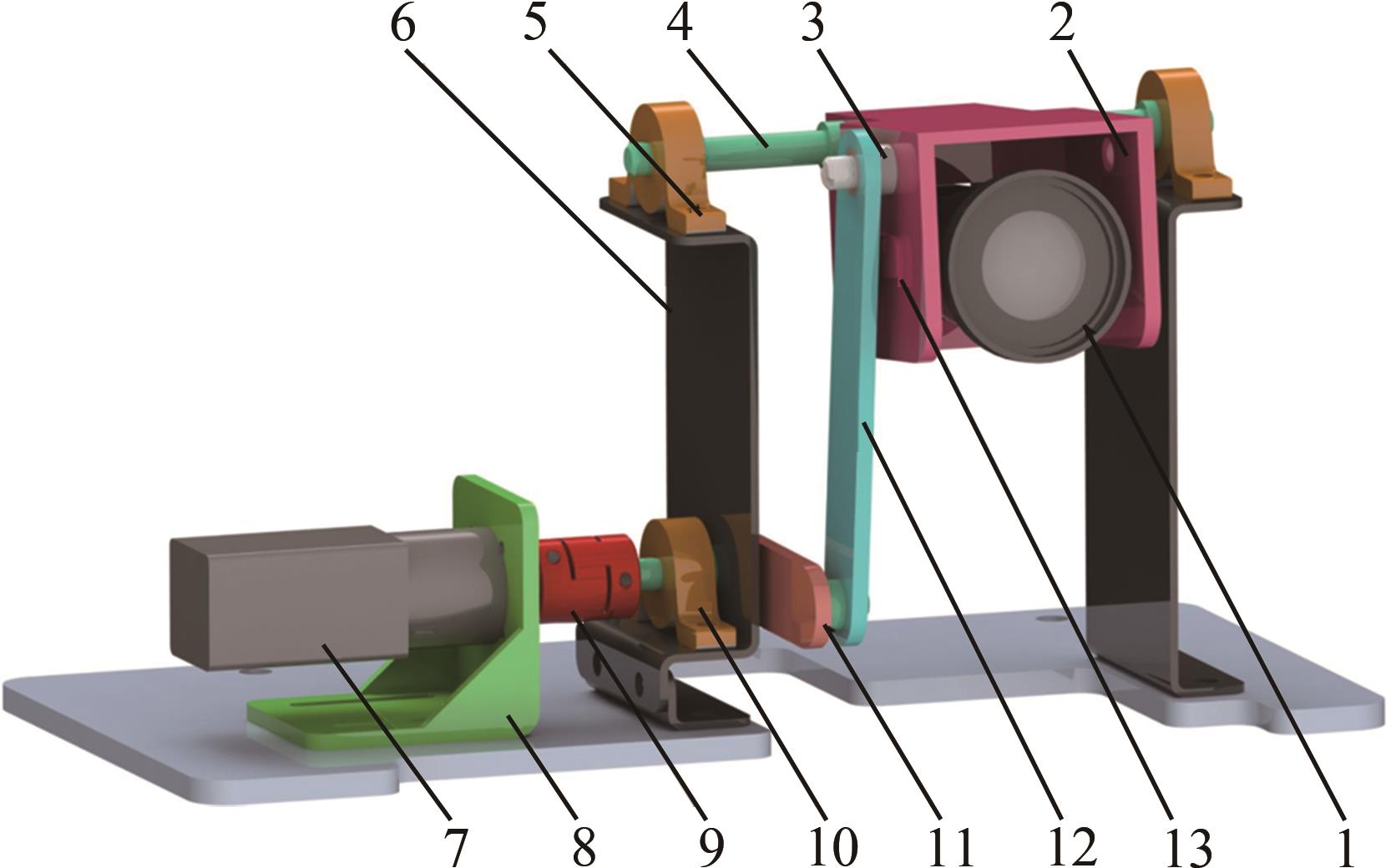

Figure 2 Dynamic image capturing device. 1. Monocular industrial camera; 2. Camera bracket; 3. Rod; 4. Shaft; 5. Bearing seat; 6. Support frame; 7. Electric motor; 8. Electric motor bracket; 9. Coupling; 10. Bearing seat; 11. Crank; 12. Link; 13. Angle sensor
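The caption lists an electric motor, a crank, a link and an angle sensor, which suggests (our reading; the linkage type is not stated here) that the camera is swung through a range of shooting angles by a crank-rocker four-bar linkage. The minimal sketch below assumes a planar linkage with made-up link lengths (`crank`, `link`, `rocker`, `ground` are hypothetical values, not the device's dimensions) and computes the camera (rocker) angle from the motor (crank) angle by intersecting two circles.

```python
import math


def rocker_angle(theta2, a, b, c, d, branch=1):
    """Rocker angle (rad) of a planar four-bar linkage for crank angle theta2 (rad).

    Hypothetical geometry: crank pivot O2 at the origin, rocker pivot O4 at (d, 0);
    a = crank, b = coupler (link), c = rocker, d = ground link, all in the same unit.
    branch = +1 or -1 selects one of the two assembly configurations.
    """
    # Position of the crank tip A
    ax, ay = a * math.cos(theta2), a * math.sin(theta2)
    # Vector from the rocker pivot O4 to A, and its length
    dx, dy = ax - d, ay
    e = math.hypot(dx, dy)
    if not abs(b - c) <= e <= b + c:
        raise ValueError("linkage cannot be assembled at this crank angle")
    # Rocker tip B lies where the circle of radius c about O4 meets the circle of radius b about A
    along = (c * c - b * b + e * e) / (2 * e)      # distance from O4 along O4->A to the chord
    perp = math.sqrt(max(c * c - along * along, 0.0))  # half-chord length
    px, py = d + along * dx / e, along * dy / e
    bx = px + branch * perp * dy / e
    by = py - branch * perp * dx / e
    # Rocker (camera) angle measured at O4 from the ground-link direction
    return math.atan2(by, bx - d)


# Hypothetical lengths in mm; the crank is the shortest link and
# 20 + 100 <= 60 + 100 (Grashof), so the crank can rotate fully while the rocker oscillates.
crank, link, rocker, ground = 20.0, 100.0, 60.0, 100.0
angles = [math.degrees(rocker_angle(2 * math.pi * k / 3600, crank, link, rocker, ground))
          for k in range(3600)]
print(f"camera swing: {min(angles):.2f} deg to {max(angles):.2f} deg")
```

With these invented lengths the rocker oscillates over roughly 41°, with its limits where the crank and link are collinear. On the real device the angle sensor (13) measures the actual angle directly, so a model like this would at most serve as a design aid or cross-check.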

| Parameter | Value or description |
|---|---|
| Sensor type | CMOS, global shutter |
| Pixel size | |
| Target surface size | |
| Resolution/pixels | 1 280×1 024 |
| Maximum frame rate/fps | 116 |
| Data interface | GigE |
| Power supply/VDC | 12 |
| Dimensions/(mm×mm×mm) | 29×29×42 |

Table 1 Technical parameters of MV-CA013-20GC industrial camera


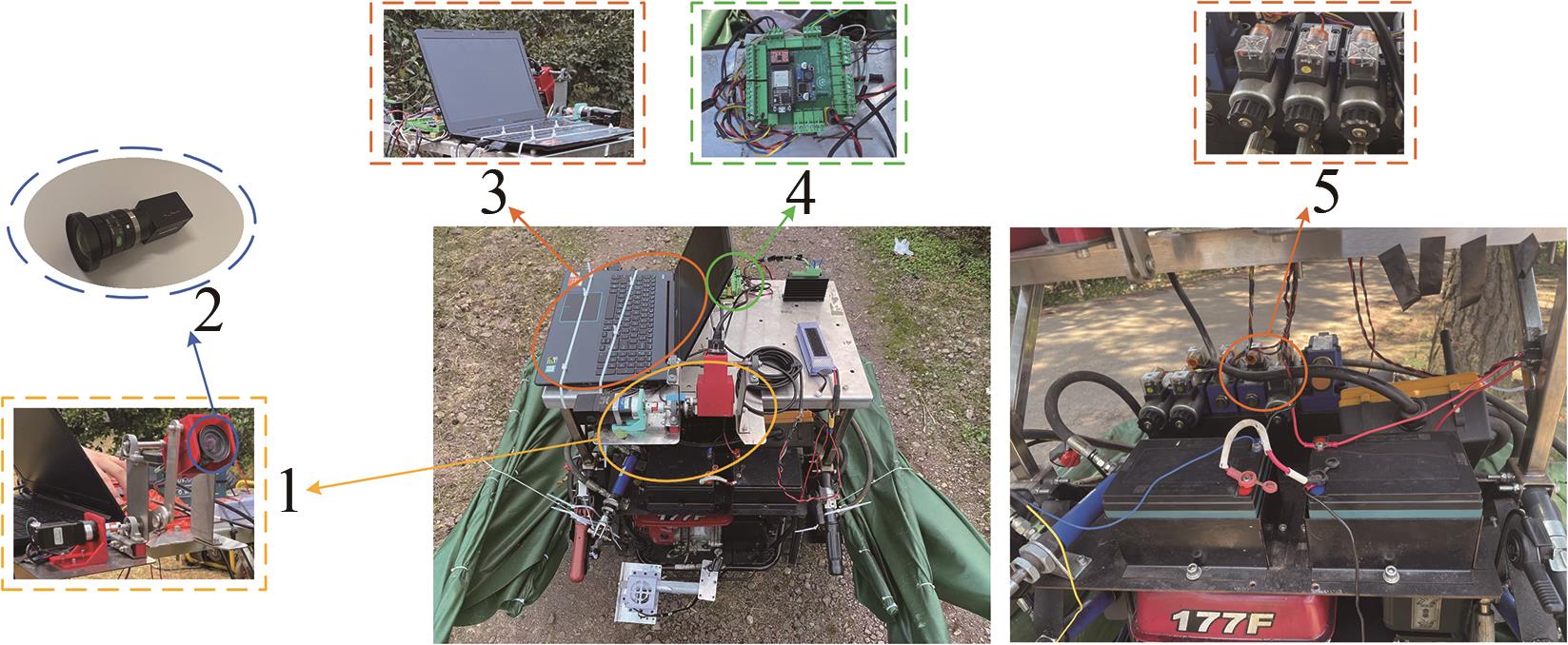

Figure 12 Crawler-type fruit collector. 1. Dynamic image capturing device; 2. Industrial camera; 3. Upper computer controller; 4. STM32 lower computer; 5. Steering solenoid valves
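Figure 12 splits control between an upper computer and an STM32 lower computer that drives the steering solenoid valves. The control law and communication protocol are not described here, so the following is only a hypothetical sketch of such a split. The upper computer turns a lateral offset and heading error relative to the detected navigation line into a left/straight/right command using bang-bang control with a deadband, then packs it into a made-up three-byte frame for the lower computer. The sign convention, gain `k_heading`, `deadband` and frame layout are all assumptions.

```python
from enum import Enum


class Steer(Enum):
    """Hypothetical on/off commands for the steering solenoid valves."""
    STRAIGHT = 0
    LEFT = 1
    RIGHT = 2


def steering_command(lateral_offset_m: float, heading_error_rad: float,
                     k_heading: float = 0.5, deadband: float = 0.05) -> Steer:
    """Bang-bang steering decision with a deadband.

    Assumed sign convention: a positive offset or heading error means the vehicle is
    right of, or pointing right of, the navigation line, so it must steer left.
    k_heading (m/rad) and deadband (m) are illustrative, not values from the source.
    """
    # Blend position and heading error into one scalar error (metres)
    error = lateral_offset_m + k_heading * heading_error_rad
    if error > deadband:
        return Steer.LEFT
    if error < -deadband:
        return Steer.RIGHT
    # Inside the deadband: keep both valves closed to avoid chattering
    return Steer.STRAIGHT


def encode_frame(cmd: Steer) -> bytes:
    """Pack a command into a hypothetical frame: header 0xAA, command byte, 8-bit checksum."""
    header = 0xAA
    checksum = (header + cmd.value) & 0xFF
    return bytes([header, cmd.value, checksum])


frame = encode_frame(steering_command(0.12, -0.05))
print(frame.hex())
```

The deadband keeps on/off valves from switching on every small measurement fluctuation; a real implementation would also need timing, valve pulse width and acknowledgement handling, none of which is specified here.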


